What Does Web Scraping Mean? Web scraping is a term for various methods used to collect information from across the Internet. Generally, this is done with software that simulates human web surfing to collect specified bits of information from different websites. Python is the most popular language for web scraping.

NextStep 2019 was an exciting event that drew professionals from multiple countries and several sectors. One of our most popular technical sessions was on how to scrape website data. Presented by Miguel Antunes, an OutSystems MVP and Tech Lead at one of our partners, Do iT Lean, this session is available on-demand. But, if you prefer to just quickly read through the highlights…keep reading, we’ve got you covered!

As developers, we all love APIs. They make our lives that much easier. However, there are times when APIs aren’t available, making it difficult for developers to access the data they need. Thankfully, there are still ways for us to access the data required to build great solutions.

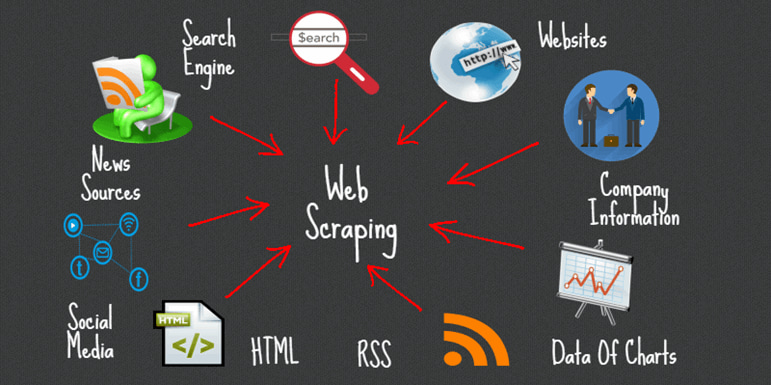

What Is Web Scraping?

Web scraping is the act of pulling data directly from a website by parsing the HTML of the web page itself. Instead of going through the tedious process of extracting data manually, web scraping uses automation to retrieve data points from any number of websites.

If a browser can render a page, and we can parse the HTML in a structured way, it’s safe to say we can perform web scraping to access all the data.
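To make "parsing the HTML in a structured way" a little more concrete, here is a minimal sketch in Python using only the standard library. The sample markup and the `LinkCollector` name are made up for illustration; a real scraper would feed in HTML fetched from a site.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects (href, text) pairs for every <a> element in a document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> we are currently inside, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page content, standing in for HTML fetched from a real site.
SAMPLE_HTML = """
<html><body>
  <h1>Banks</h1>
  <a href="/country/PT">Portugal</a>
  <a href="/country/ES">Spain</a>
</body></html>
"""

collector = LinkCollector()
collector.feed(SAMPLE_HTML)
print(collector.links)
```

Once the markup has been turned into structured pairs like these, the rest of a scraper is ordinary data handling.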

Benefits of Web Scraping and When to Use It

You don’t have to look far to come up with many benefits of web scraping.

- No rate-limits: Unlike with APIs, scraping isn’t tied to an API key and its quota. With APIs, you usually need to register an account to receive an API key, and the amount of data you can collect is limited by the package you buy.

- Anonymous access: Since there’s no API key, your information can’t be tracked. Only your IP address and cookies can be tracked, but that can easily be fixed through spoofing, allowing you to remain anonymous while accessing the data you need.

- The data is already available: When you visit a website, the data is public and available. There are some legal concerns regarding this, but most of the time, you just need to understand the terms and conditions of the website you’re scraping, and then you can use the data from the site.

How to Web Scrape with OutSystems: Tutorial

Regardless of the language you use, there’s an excellent scraping library that’s perfectly suited to your project:

- Python: BeautifulSoup or Scrapy

- Ruby: Upton, Wombat or Nokogiri

- Node: Scraperjs or X-ray

- Go: Scrape

- Java: Jaunt

OutSystems is no exception. Its Text and HTML Processing component is designed to interpret the text from the HTML file and convert it to an HTML Document (similar to a JSON object). This makes it possible to access all the nodes.

It also extracts information from plain text data with regular expressions, or from HTML with CSS selectors. You’ll be able to manipulate HTML documents with ease while sanitizing user input against HTML injection.
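The same two ideas, regular-expression extraction and escaping user input, can be sketched in Python. This is not the OutSystems component's API, just a standard-library illustration. The sample text and codes are made up, and the pattern is a simplified take on the 8- or 11-character SWIFT code layout.

```python
import html
import re

# Simplified SWIFT/BIC shape: 4 letters (bank), 2 letters (country),
# 2 alphanumerics (location), and an optional 3-character branch suffix.
SWIFT_PATTERN = re.compile(r"\b[A-Z]{4}[A-Z]{2}[A-Z0-9]{2}(?:[A-Z0-9]{3})?\b")

# Hypothetical plain text containing two made-up codes.
text = "Codes seen today: ABCDPTPL and WXYZPTPLXXX."
codes = SWIFT_PATTERN.findall(text)
print(codes)

# Escaping user input before placing it in a page guards against HTML injection.
user_input = '<script>alert("hi")</script>'
safe = html.escape(user_input)
print(safe)
```

A pattern like this is only a first filter; any uppercase word of the right length would match, so real data usually needs a second validation step.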

But what does web scraping look like in real life? Let’s take a look at scraping an actual website.

We start with a simple plan:

- Pinpoint your target: a simple HTML website;

- Design your scraping scheme;

- Run and let the magic happen.

Scraping an Example Website

Our example website is www.bank-code.net, a site that lists all the SWIFT codes from the banking industry. There’s a ton of data here, so let’s get scraping.

This is what the website looks like:

If you want to collect these SWIFT codes for an internal project, it will take hours to copy them manually. With scraping, extracting the data will take a fraction of that time.

- Navigate to your OutSystems personal environment, and start a new app (if you don't have one yet, sign up for the OutSystems free edition);

- Choose “Reactive App”;

- Fill in your app’s basic information, including its name and a description of the app to continue;

- Click on “Create Module”;

- Reference the library you’re going to use from the Forge component, which in this case is the “Text and HTML Processing” library;

- Go to the website and copy the URL, for example: https://bank-code.net/country/PORTUGAL-%28PT%29/100. We’re going to use Portugal as a baseline for this tutorial;

- In the OutSystems app, create a REST API integration with the website. It’s basically just a GET request, so paste in the copied URL;

- Notice that the pagination offset is already present in the URL: it’s the “/100” part. Change that to be a REST input parameter;

- Out of our set of actions, we’ll use the ones designed to work with HTML, which in this case, are Attributes or Elements. We can send the HTML text of the website to these actions. This will return our HTML document, the one mentioned before that looks like a JSON object where you can access all the nodes of the HTML.
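To make the pagination step above concrete outside of OutSystems, here is a small Python sketch that treats the offset as an input parameter. The `build_page_url` helper is hypothetical, and the assumption that pages advance in steps of 100 is ours; the tutorial only shows the “/100” offset.

```python
from urllib.parse import quote

BASE_URL = "https://bank-code.net/country"


def build_page_url(country: str, offset: int) -> str:
    """Return the listing URL for a country at a given pagination offset."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    # quote() percent-encodes the parentheses: "(PT)" becomes "%28PT%29".
    return f"{BASE_URL}/{quote(country)}/{offset}"


# Assumption: pages advance in steps of 100; the tutorial only shows "/100".
urls = [build_page_url("PORTUGAL-(PT)", offset) for offset in (100, 200, 300)]
for url in urls:
    print(url)
```

Keeping the offset as a parameter means the same request definition can be reused for every page of results.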

Now we can create our action to scrape the website. Let’s call it “Scrape”, for example.

- Use the endpoint previously created, which will gather the HTML. We’ll parse this HTML text into our document;

- Going back to the website, in Chrome, right-click the part of the page that contains the content you’d like to scrape. Click “Inspect” and, in the panel that opens, identify the table you’d like to scrape;

- Since the table has its own ID, it will be unique across the HTML text, making it easy to identify in the text;

- Now that we have the table, we want to get all of its rows. To find the selector for a row, expand the HTML until you see the rows, right-click one of them, and choose Copy > Copy Selector. This gives you “#tableID > tbody > tr:nth-child(1)” for the first row. Since we want all of them, we’ll use “#tableID > tbody > tr”;

- You now have all the elements for the table rows. It’s time to iterate over the rows and select the columns;

- Now, select each column’s text, using the HTML document and the selector from the last action, in addition to our column selector: “> td:nth-child(2)” is the selector for the second column, which contains the Bank Name. For the other columns, you just need to change n in “nth-child(n)”.
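For readers who want to see the row and column selection spelled out in code, the steps above can be sketched in Python with the standard library. This is an illustration rather than the OutSystems implementation. The `TableRows` class, the sample markup, and its three columns are hypothetical, and the sketch assumes the table contains no nested tables.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collects the cell text of every body row in the table with a given id."""

    def __init__(self, table_id):
        super().__init__()
        self.table_id = table_id
        self.rows = []
        self._in_table = False
        self._in_body = False
        self._row = None    # cells of the <tr> being read, or None
        self._cell = None   # text fragments of the <td> being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table" and dict(attrs).get("id") == self.table_id:
            self._in_table = True
        elif not self._in_table:
            return
        elif tag == "tbody":
            self._in_body = True
        elif tag == "tr" and self._in_body:
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if not self._in_table:
            return
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
        elif tag == "tbody":
            self._in_body = False
        elif tag == "table":
            self._in_table = False


# Hypothetical markup with made-up banks and codes.
SAMPLE_HTML = """
<table id="tableID">
  <thead><tr><th>#</th><th>Bank</th><th>SWIFT</th></tr></thead>
  <tbody>
    <tr><td>1</td><td>Example Bank A</td><td>ABCDPTPL</td></tr>
    <tr><td>2</td><td>Example Bank B</td><td>WXYZPTPLXXX</td></tr>
  </tbody>
</table>
"""

parser = TableRows("tableID")
parser.feed(SAMPLE_HTML)

# Index 1 plays the role of "td:nth-child(2)", the bank name column.
bank_names = [row[1] for row in parser.rows]
print(bank_names)
```

The header row is skipped because it sits in `<thead>`, which mirrors how the “#tableID > tbody > tr” selector only matches body rows.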

Once you have scraped all the information, check whether each code already exists in the database. If it does, we just need to update the data. If it doesn’t, we create the record. Once you hit 1-Click Publish, this should provide us with all the records for the first page of the website.
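The update-or-create logic described above might look like this in Python with SQLite. It is a sketch under our own assumptions: the `bank` table, its columns, and the `save_bank` helper are hypothetical, and OutSystems would use its own entity actions instead.

```python
import sqlite3


def save_bank(conn, swift_code, bank_name):
    """Update the record for swift_code if it exists, otherwise create it."""
    exists = conn.execute(
        "SELECT 1 FROM bank WHERE swift_code = ?", (swift_code,)
    ).fetchone()
    if exists:
        conn.execute(
            "UPDATE bank SET name = ? WHERE swift_code = ?",
            (bank_name, swift_code),
        )
    else:
        conn.execute(
            "INSERT INTO bank (swift_code, name) VALUES (?, ?)",
            (swift_code, bank_name),
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bank (swift_code TEXT PRIMARY KEY, name TEXT)")

# Hypothetical scraped rows; the second one repeats a code with a new name.
scraped = [
    ("ABCDPTPL", "Example Bank A"),
    ("ABCDPTPL", "Example Bank A, S.A."),
]
for code, name in scraped:
    save_bank(conn, code, name)
conn.commit()

stored = conn.execute("SELECT swift_code, name FROM bank").fetchall()
print(stored)
```

Because the second row updates the first instead of duplicating it, re-running the scraper on the same page leaves one record per code.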

The process above is basically our tool for parsing the data from the first page. We identify the site, identify the content that we want, and identify how to get the data. This runs all the rows of the table and parses all the text from the columns, storing it in our database.

For the full code used in this example, you can go to the OutSystems Forge and download it from there.

Web Scraping Enterprise Scale: Real-Life Scenario - Frankort & Koning

So, you may think that this was a nice and simple example of scraping a website, but how can you apply this at the enterprise level? To illustrate this tool’s effectiveness at an enterprise-level, we’ll use a case study of Frankort & Koning, a company we did this for.

Frankort & Koning is a Netherlands-based fresh fruit and vegetable company. They buy products from producers and sell them to the market. As they trade in fresh produce, there are many regulations that govern their industry. Frankort & Koning needs to check each product that they buy to resell.

Imagine how taxing it would be to check each product coming into their warehouse to make sure that all the producers and their products are certified by the relevant industry watchdog. This needs to be done multiple times per day per product.

GlobalGap has a very basic database, which they use to give products a thirteen-digit GGN (Global Gap Number). This number identifies the producer, allowing them to track all the products and determine if they're really fresh. This helps Frankort & Koning certify that the products are suitable to be sold to their customers. Since GlobalGap doesn't have any API to assist with this, this is where the scraping part comes in.

To work with the database as it is now, you need to enter the GGN into the website manually. Once the information loads, there will be an expandable table at the bottom of the page. Clicking on the relevant column will provide you with the producer’s information and whether they’re certified to sell their products. Imagine doing this manually for each product that enters the Frankort & Koning warehouse. It would be totally impractical.

How Did We Perform Web Scraping for Frankort & Koning?

We identified the need for some automation here. Selenium was a great tool to set up the automation we required. Selenium automates user interactions on a website. We created an OutSystems extension with Selenium and Chrome driver.

This allowed Selenium to run Chrome instances on the server. We also needed to give Selenium some instructions on how to do the human interaction. After we took care of the human interaction aspect, we needed to parse the HTML to bring the data to our side.

The instructions Selenium needed to automate the human interaction included identifying our base URL and the 'Accept All Cookies' button, as this button popped up when opening the website. We needed to identify that button so that we could program a click on that button.

We also needed to produce instructions on how to interact with the collapse icon on the results table and the input field where the GGN would be entered. We set all of this up to run on an OutSystems timer, with Chrome in headless mode.

We told Selenium to go to our target website and find the cookie button and input elements. We then sent the keys to the page, just as a user would when typing the GGN, and waited a moment for the page to be rendered. After this, we iterated over all the results, and then output the HTML back to the OutSystems app.
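The sequence described above could be sketched roughly as follows with Selenium's Python bindings. This is not the actual OutSystems extension: the URL, the CSS selectors, and the function names are all hypothetical placeholders, and the real values would come from inspecting the target site.

```python
import time

CSS = "css selector"  # same string value as selenium's By.CSS_SELECTOR

# Hypothetical URL and selectors; the real ones come from inspecting the site.
SEARCH_URL = "https://example.com/ggn-search"
COOKIE_BUTTON = "#accept-all-cookies"
GGN_INPUT = "input[name='ggn']"
EXPAND_ICON = "table.results .expand"


def make_headless_chrome():
    """Start Chrome without a visible window (requires selenium and Chrome)."""
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    return webdriver.Chrome(options=options)


def fetch_certificate_html(driver, ggn, wait_seconds=2.0):
    """Drive the search page like a user would and return the rendered HTML."""
    driver.get(SEARCH_URL)
    driver.find_element(CSS, COOKIE_BUTTON).click()     # dismiss cookie banner
    driver.find_element(CSS, GGN_INPUT).send_keys(ggn)  # type the GGN
    time.sleep(wait_seconds)                            # let the results render
    for icon in driver.find_elements(CSS, EXPAND_ICON):
        icon.click()                                    # expand each result row
    return driver.page_source
```

In use, you would create the driver with `make_headless_chrome()`, call `fetch_certificate_html(driver, ggn)` for each GGN, and call `driver.quit()` when done. A fixed sleep mirrors the "waited a moment" step; Selenium's `WebDriverWait` is usually a more reliable way to wait for a specific element.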

This is how we tie together automation and user interaction with web scraping.

These are the numbers we worked with, with Frankort & Koning:

- 700+ producers supplying products

- 160+ products provided each day

- 900+ certificates - the number of checks they needed to perform daily

- It would’ve taken about 15 hours to process this information manually

- Instead, it took only two hours to process this information automatically
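A quick back-of-the-envelope calculation puts those figures in perspective. It treats “900+” as exactly 900 checks, so the per-check times are upper bounds.

```python
CHECKS_PER_DAY = 900      # certificates checked daily (the list says "900+")
MANUAL_HOURS = 15
AUTOMATED_HOURS = 2

hours_saved = MANUAL_HOURS - AUTOMATED_HOURS
reduction_pct = 100 * hours_saved / MANUAL_HOURS
manual_sec_per_check = MANUAL_HOURS * 3600 / CHECKS_PER_DAY
automated_sec_per_check = AUTOMATED_HOURS * 3600 / CHECKS_PER_DAY

print(f"{hours_saved} hours saved per day ({reduction_pct:.0f}% less time)")
print(f"{manual_sec_per_check:.0f}s vs {automated_sec_per_check:.0f}s per check")
```

In other words, a check that takes about a minute by hand drops to roughly eight seconds when automated.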

This is just one example of how web scraping can contribute to bottom-line savings in an organization.

Still Got Questions?

Just drop me a line! And in the meantime, if you enjoyed my session, take a look at the NextStep 2020 conference, now available on-demand, with more than 50 sessions presented by thought leaders driving the next generation of innovation.

Related posts

Web scraping, web crawling, HTML scraping, and any other form of web data extraction can be complicated. Between obtaining the correct page source, parsing the source correctly, rendering JavaScript, and obtaining data in a usable form, there’s a lot of work to be done. Different users have very different needs, and there are tools out there for all of them: people who want to build web scrapers without coding, developers who want to build web crawlers to crawl large sites, and everyone in between. Here is our list of the 10 best web scraping tools on the market right now. From open source projects to hosted SaaS solutions to desktop software, there is sure to be something for everyone looking to make use of web data!

1. Scraper API

Website: https://www.scraperapi.com/

Who is this for: Scraper API is a tool for developers building web scrapers. It handles proxies, browsers, and CAPTCHAs so developers can get the raw HTML from any website with a simple API call.

Why you should use it: Scraper API doesn’t burden you with managing your own proxies. It manages its own internal pool of hundreds of thousands of proxies from a dozen different proxy providers, and has smart routing logic that routes requests through different subnets and automatically throttles requests in order to avoid IP bans and CAPTCHAs. It’s the ultimate web scraping service for developers, with special pools of proxies for ecommerce price scraping, search engine scraping, social media scraping, sneaker scraping, ticket scraping and more! If you need to scrape millions of pages a month, you can use this form to ask for a volume discount.

2. ScrapeSimple

Website: https://www.scrapesimple.com

Who is this for: ScrapeSimple is the perfect service for people who want a custom scraper built for them. Web scraping is made as simple as filling out a form with instructions for what kind of data you want.

Why you should use it: ScrapeSimple lives up to its name with a fully managed service that builds and maintains custom web scrapers for customers. Just tell them what information you need from which sites, and they will design a custom web scraper to deliver the information to you periodically (could be daily, weekly, monthly, or whatever) in CSV format directly to your inbox. This service is perfect for businesses that just want an HTML scraper without needing to write any code themselves. Response times are quick and the service is incredibly friendly and helpful, making it a good fit for people who just want the full data extraction process taken care of for them.

3. Octoparse

Website: https://www.octoparse.com/

Who is this for: Octoparse is a fantastic tool for people who want to extract data from websites without having to code, while still having control over the full process with their easy to use user interface.

Why you should use it: Octoparse is the perfect tool for people who want to scrape websites without learning to code. It features a point and click screen scraper, allowing users to scrape behind login forms, fill in forms, input search terms, scroll through infinite scroll, render javascript, and more. It also includes a site parser and a hosted solution for users who want to run their scrapers in the cloud. Best of all, it comes with a generous free tier allowing users to build up to 10 crawlers for free. For enterprise level customers, they also offer fully customized crawlers and managed solutions where they take care of running everything for you and just deliver the data to you directly.

4. ParseHub

Website: https://www.parsehub.com/

Who is this for: Parsehub is an incredibly powerful tool for building web scrapers without coding. It is used by analysts, journalists, data scientists, and everyone in between.

Why you should use it: Parsehub is dead simple to use: you can build web scrapers simply by clicking on the data that you want. It then exports the data in JSON or Excel format. It has many handy features such as automatic IP rotation, allowing scraping behind login walls, going through dropdowns and tabs, getting data from tables and maps, and much much more. In addition, it has a generous free tier, allowing users to scrape up to 200 pages of data in just 40 minutes! Parsehub is also nice in that it provides desktop clients for Windows, Mac OS, and Linux, so you can use them from your computer no matter what system you’re running.

5. Scrapy

Website: https://scrapy.org

Who is this for: Scrapy is a web scraping library for Python developers looking to build scalable web crawlers. It’s a full on web crawling framework that handles all of the plumbing (queueing requests, proxy middleware, etc.) that makes building web crawlers difficult.

Why you should use it: As an open source tool, Scrapy is completely free. It is battle tested, has been one of the most popular Python libraries for years, and is probably the best Python web scraping tool for new applications. It is well documented and there are many tutorials on how to get started. In addition, deploying the crawlers is very simple and reliable, and the processes can run themselves once they are set up. As a fully featured web scraping framework, there are many middleware modules available to integrate various tools and handle various use cases (handling cookies, user agents, etc.).

6. Diffbot

Website: https://www.diffbot.com

Who is this for: Enterprises who have specific data crawling and screen scraping needs, particularly those who scrape websites that often change their HTML structure.

Why you should use it: Diffbot is different from most page scraping tools out there in that it uses computer vision (instead of HTML parsing) to identify relevant information on a page. This means that even if the HTML structure of a page changes, your web scrapers will not break as long as the page looks the same visually. This is an incredible feature for long running mission critical web scraping jobs. While they may be a bit pricy (the cheapest plan is $299/month), they offer a premium service that may be worth it for large customers.

7. Cheerio

Website: https://cheerio.js.org

Who is this for: NodeJS developers who want a straightforward way to parse HTML. Those familiar with jQuery will immediately appreciate the best javascript web scraping syntax available.

Why you should use it: Cheerio offers an API similar to jQuery, so developers familiar with jQuery will immediately feel at home using Cheerio to parse HTML. It is blazing fast, and offers many helpful methods to extract text, html, classes, ids, and more. It is by far the most popular HTML parsing library written in NodeJS, and is probably the best NodeJS web scraping tool or javascript web scraping tool for new projects.

8. BeautifulSoup

Website: https://www.crummy.com/software/BeautifulSoup/

Who is this for: Python developers who just want an easy interface to parse HTML, and don’t necessarily need the power and complexity that comes with Scrapy.

Why you should use it: Like Cheerio for NodeJS developers, Beautiful Soup is by far the most popular HTML parser for Python developers. It’s been around for over a decade now and is extremely well documented, with many web parsing tutorials teaching developers to use it to scrape various websites in both Python 2 and Python 3. If you are looking for a Python HTML parsing library, this is the one you want.

9. Puppeteer

Website: https://github.com/GoogleChrome/puppeteer

Who is this for: Puppeteer is a headless Chrome API for NodeJS developers who want very granular control over their scraping activity.

Why you should use it: As an open source tool, Puppeteer is completely free. It is well supported, actively developed, and backed by the Google Chrome team itself. It is quickly replacing Selenium and PhantomJS as the default headless browser automation tool. It has a well thought out API, and automatically installs a compatible Chromium binary as part of its setup process, meaning you don’t have to keep track of browser versions yourself. While it’s much more than just a web crawling library, it’s often used to scrape website data from sites that require JavaScript to display information, since it handles scripts, stylesheets, and fonts just like a real browser. Note that while it is a great solution for sites that require JavaScript to display data, it is very CPU and memory intensive, so using it for sites where a full blown browser is not necessary is probably not a great idea. Most times a simple GET request should do the trick!
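For comparison, here is what a "simple GET request" can look like in Python with only the standard library. This is a minimal sketch: the `USER_AGENT` string and both helper names are hypothetical, and a production scraper would add retries and error handling.

```python
from urllib.request import Request, urlopen

# Hypothetical identifier; use one that describes your own project.
USER_AGENT = "example-scraper/0.1"


def build_request(url: str) -> Request:
    """Prepare a plain GET request that identifies the client."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it, falling back to UTF-8."""
    with urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("https://example.com/")` returns the page source as a string, with no browser process involved. If the data you need is missing from that string, the page likely builds it with JavaScript, and a headless browser becomes the better choice.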

10. Mozenda

Website: https://www.mozenda.com/

Who is this for: Enterprises looking for a cloud based self serve webpage scraping platform need look no further. With over 7 billion pages scraped, Mozenda has experience in serving enterprise customers from all around the world.

Why you should use it: Mozenda allows enterprise customers to run web scrapers on their robust cloud platform. They set themselves apart with the customer service (providing both phone and email support to all paying customers). Its platform is highly scalable and will allow for on premise hosting as well. Like Diffbot, they are a bit pricy, and their lowest plans start at $250/month.

Honorable Mention 1. Kimurai

Website: https://github.com/vifreefly/kimuraframework

Who is this for: Kimurai is an open source web scraping framework written in Ruby that makes it incredibly easy to get a Ruby web scraper up and running.

Why you should use it: Kimurai is quickly becoming known as the best Ruby web scraping library, as it’s designed to work with headless Chrome/Firefox, PhantomJS, and normal GET requests all out of the box. Its syntax is similar to Scrapy and developers writing Ruby web scrapers will love all of the nice configuration options to do things like set a delay, rotate user agents, and set default headers.

Honorable Mention 2. Goutte

Website: https://github.com/FriendsOfPHP/Goutte

Who is this for: Goutte is an open source web crawling framework written in PHP that makes it super easy to extract data from HTML/XML responses using PHP.

Why you should use it: Goutte is a very straightforward, no frills framework that is considered by many to be the best PHP web scraping library, as it’s designed for simplicity, handling the vast majority of HTML/XML use cases without too much additional cruft. It also seamlessly integrates with the excellent Guzzle requests library, which allows you to customize the framework for more advanced use cases.

The open web is by far the greatest global repository for human knowledge, and there is almost no information that you can’t find through extracting web data. Because web scraping is done by many people of various levels of technical ability and know-how, there are many tools available that serve everyone from people who don’t want to write any code to seasoned developers just looking for the best open source solution in their language of choice.

Hopefully, this list of tools has been helpful in letting you take advantage of this information for your own projects and businesses. If you have any web scraping jobs you would like to discuss with us, please contact us here. Happy scraping!